This is the third blog post for NanoMatriX’s AI Learning and Awareness series.

Decision-makers and business owners are finally recognizing the importance of AI for their businesses. But this awareness comes at a cost: the surge in new AI models can be daunting and overwhelming, causing decision paralysis among corporate leaders.

Despite continued enthusiasm for the transformative power of AI, with 85% of leaders planning to boost AI spending in 2024, a revenue growth gap is widening between early adopters and those lagging behind. Meanwhile, 90% of C-suite executives are still waiting for AI to move beyond the hype or are experimenting with it only on a small scale. A wait-and-see strategy may prove detrimental in the long run.

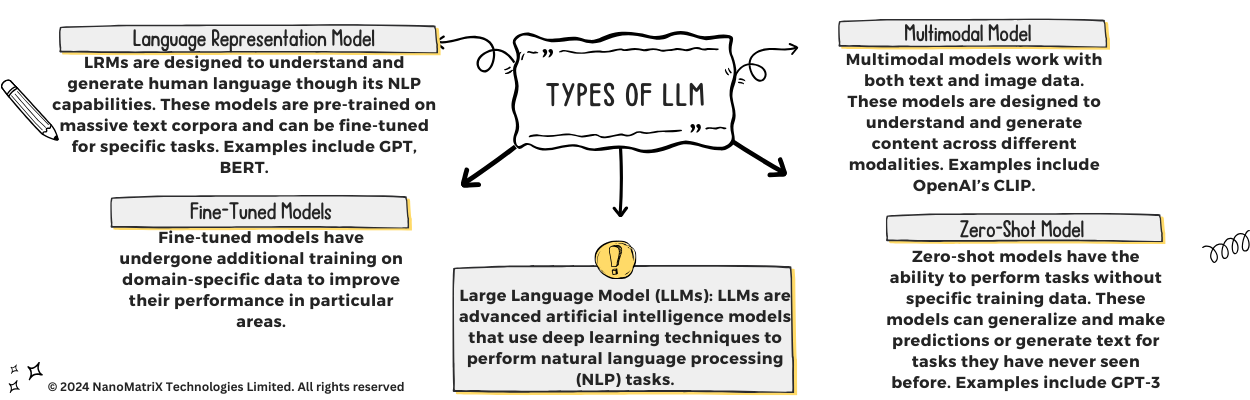

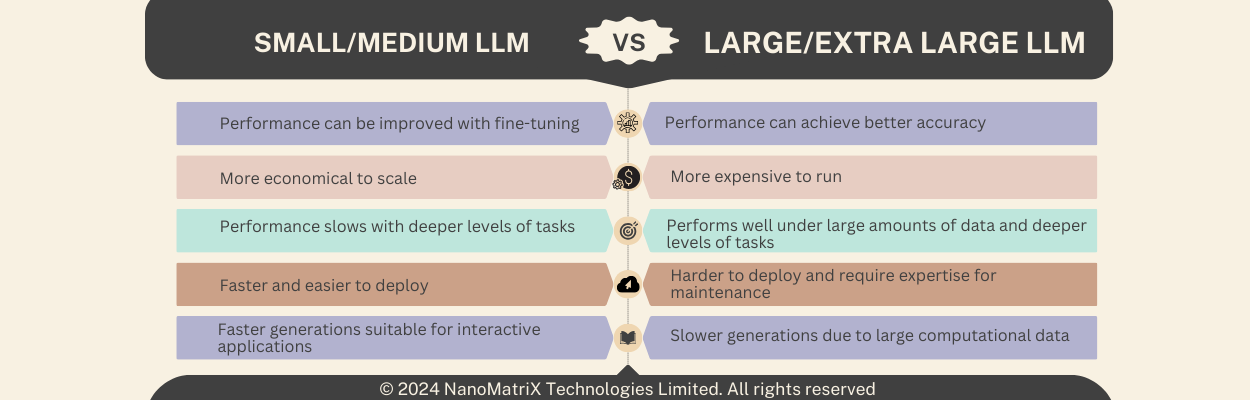

Opting for an optimal large language model (LLM) for your business requires striking a delicate balance between scale and specificity. Large AI models exhibit strong, out-of-the-box performance across a diverse array of applications, boasting enhanced reasoning capabilities. However, this comes at a premium cost. On the other hand, smaller, specialized models tend to be more cost-effective but pose challenges in terms of training and maintenance, particularly when addressing a multitude of use cases.

Numerous companies delving into AI experimentation and usage have devised proofs-of-concept (POCs) utilizing large models, only to encounter challenges in economically scaling these models to align with their targeted business objectives. Consequently, project teams are actively pursuing alternatives that are both more efficient and cost-effective for seamless integration into full-scale production for their business.

This article provides a streamlined framework to help business leaders understand and choose the best AI models for their businesses. We will guide you through evaluating your organization’s specific requirements, exploring available models and their features, selecting the optimal sourcing option, and addressing scalability. Together, these steps will help you pinpoint the most fitting LLM solution for your enterprise.

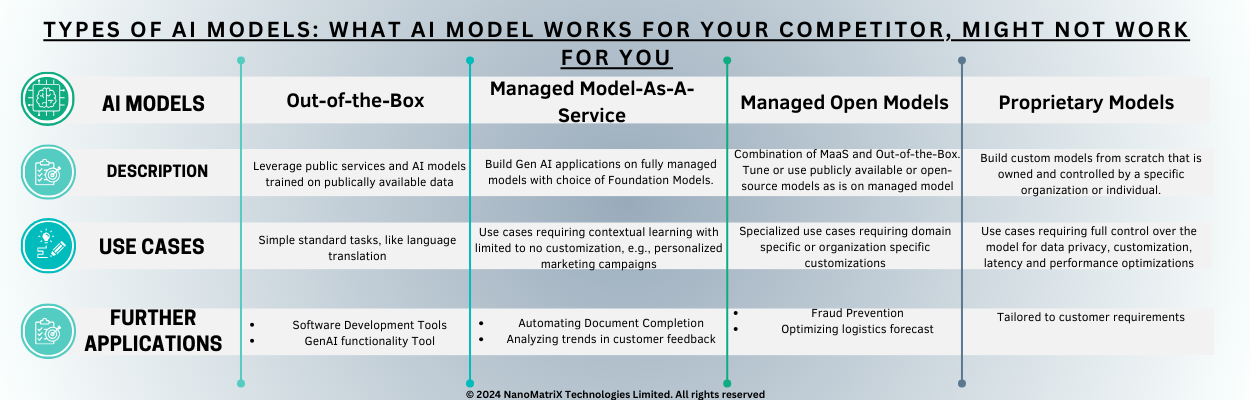

Types of AI Models:

An AI model is a mathematical model that applies one or more algorithms to data to recognize patterns, make predictions, or make decisions without human intervention. Based on how they are sourced and deployed, AI models fall into four types:

1. Out-of-the-Box AI Model:

An “out-of-the-box” AI model is a pre-trained or pre-configured artificial intelligence model that is ready to use without extensive customization or additional training. These models offer general-purpose functionality and can perform general tasks without fine-tuning on user-specific data. Because they come with pre-learned features and parameters, they can be used with minimal effort or expertise.

Out-of-the-box Models Function At The Expense of Your Business:

These models are often trained on large and diverse datasets, which gives them a degree of versatility across tasks within a particular domain. However, out-of-the-box models are not profitable for your business in the long run. Because they are trained on other organizations’ data rather than your own, they cannot capture the workflows and patterns specific to your business, and they cannot generate analysis tailored to it.

2. Managed Model-As-A-Service:

“Managed Model as a Service” refers to a cloud-based solution that streamlines the deployment and management of machine learning models. This service reduces the complexities associated with infrastructure management in the machine learning lifecycle. With a managed model as a service, users can deploy, host, and maintain machine learning models without delving into the complexities of underlying infrastructure, server management, or scaling concerns.

These services provide a fully managed environment, enabling users to focus on model development and training. Key features often include version control, A/B testing, monitoring tools, and seamless integration with other cloud services. By abstracting away infrastructure complexities, managed model-as-a-service offerings empower organizations to efficiently deploy and manage machine learning models in production, enhancing scalability and easing the overall operational burden.

3. Managed Open Models:

A managed open model is a publicly available, open-source model that has been fine-tuned for your business. This option suits use cases that require domain-specific or organization-specific customization of an open-source model.

4. Proprietary Model:

A proprietary AI model refers to an artificial intelligence model that is owned and controlled by a specific organization or individual. Unlike open-source models, which are publicly accessible and can be freely modified, a proprietary AI model is characterized by its closed nature, with the underlying algorithms, architecture, and parameters being proprietary and strictly not disclosed to the public.

Proprietary AI models are often developed by companies as part of their intellectual property and competitive advantage. The organizations that create these models retain exclusive rights to their use, modification, and distribution. Users or clients may have access to the functionalities of the model through APIs or services provided by the owning organization, but they do not have visibility or control over the internal workings of the model.

Proprietary AI models are the best fit for your business because they can be trained on your specific data. Still, choose your AI solution provider carefully, because you will depend on that provider for all of your updates and support needs.

NanoMatriX Technologies is one of the best AI solution providers for proprietary models because we understand the risks that AI misuse poses and offer advanced, cyber-secure, data-protected solutions tailored to your specific business. We have protocols and procedures in place for handling the confidential data of enterprises and government agencies, and we are compliant with ISO 27001:2022, ISO 27701:2019, ISO 27017:2015, ISO 27018:2019, and ISO 9001. This ensures your sensitive data is safe in our AI solutions.

Types of AI and ML Operations:

1. MLOps

Machine Learning Operations (MLOps) is a fundamental component of machine learning (ML) engineering, dedicated to optimizing the journey of deploying ML models into production and subsequently overseeing their maintenance and monitoring. MLOps offers a standardized platform for developers to explore, experiment, build, and iterate with minimal overhead and strong traceability.

At its core, MLOps relies on a collaborative and streamlined strategy within the ML development lifecycle. This approach emphasizes the collaboration of people, processes, and technology to enhance the efficiency of end-to-end activities involved in the development, construction, and operation of ML workloads.

2. Foundation Model Ops

Foundation Model Operations (FMOps) consist of the platform, tools, and best practices empowering businesses to harness prevailing generative AI technologies for tailored business objectives. FMOps equip developers with versatile tools to engage with data, APIs, and FMs (Foundation Models) in a standardized, replicable, and observable fashion.

Simultaneously, it enables business users to derive insights and elevate business units. The distinctive aspect of FMs lies in their size and broad utility, setting them apart from traditional ML models that are typically designed for specific tasks such as sentiment analysis, image classification, and trend forecasting.

3. Large Language Model Ops

A Large Language Model Operations (LLMOps) platform gives data scientists and software engineers a collaborative environment designed to streamline iterative data exploration. It offers real-time collaboration features for experiment tracking, prompt engineering, and model and pipeline management. The platform also supports controlled transitions when deploying and managing models.

In simple terms, LLMOps is the set of practices and tools used to prompt, deploy, and maintain LLMs.

Choosing The Right AI Model For Your Business:

1. Define What You Need:

To maximize AI’s positive impact on your business, start by pinpointing the exact issue you want to tackle. Whether it’s boosting business intelligence with an AI chatbot, hastening digital transformation via AI-generated code, or refining enterprise search with a multilingual embedding model, a clear understanding of your needs is key. Once you’ve got that clarity, sketch out a strategy integrating AI solutions tailored to your requirements.

Identify one or more focused, task-oriented use cases for your problem. Keep in mind that each use case might call for different types of LLMs to achieve your desired outcomes.

For specific use cases where complex reasoning isn’t essential, opting for smaller models – perhaps with slight adjustments through fine-tuning – is favored. This choice is driven by factors such as reduced latency and cost efficiency. Conversely, in scenarios demanding complex reasoning across diverse topics, a larger model, enhanced by dynamically retrieved information, may deliver superior accuracy, justifying the higher associated costs.
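The trade-off above can be expressed as a simple routing rule. The sketch below is illustrative only: the tier names (`small-finetuned`, `large-general`) and the `needs_complex_reasoning` flag are hypothetical placeholders rather than real products or APIs, and a real decision would also weigh your latency and cost targets.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UseCase:
    name: str
    needs_complex_reasoning: bool  # hypothetical flag set during use-case analysis


@dataclass(frozen=True)
class ModelPlan:
    model: str           # hypothetical model tier name
    use_retrieval: bool  # whether to add dynamically retrieved context


def plan_for(use_case: UseCase) -> ModelPlan:
    """Route narrow tasks to a small fine-tuned model and broad reasoning to a large one."""
    if use_case.needs_complex_reasoning:
        # Larger model plus retrieved context: higher cost, potentially higher accuracy.
        return ModelPlan(model="large-general", use_retrieval=True)
    # Smaller model: lower latency and cost for focused tasks.
    return ModelPlan(model="small-finetuned", use_retrieval=False)


for uc in (
    UseCase("invoice field extraction", needs_complex_reasoning=False),
    UseCase("cross-domain research assistant", needs_complex_reasoning=True),
):
    plan = plan_for(uc)
    print(f"{uc.name}: {plan.model} (retrieval={plan.use_retrieval})")
```

Keeping the decision in one small function makes it easy to revisit as you add use cases, since each one may call for a different type of LLM.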

2. Choose The Size of the AI Model:

The next step is to choose a model size that suits your business needs. A common way to compare model sizes is by the number of parameters each model contains. Parameters are the internal variables and weights that a model learns during training, and they determine how it turns inputs into outputs.

For instance, larger models with more parameters (more than 50 billion) are generally considered more powerful and capable of tackling complex tasks. However, that power comes with a trade-off: they require more computational resources to run.

Although parameter count serves as a useful metric for model size, it doesn’t fully encapsulate the comprehensive dimensions of a model. Achieving a holistic view involves considering supporting architecture, training data characteristics (e.g., volume, variety, and quality), optimization techniques (e.g., quantization), transformer efficiencies, choice of learning frameworks, and model compression methods. The intricate variations in models and their creation processes complicate direct, apples-to-apples comparisons.
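Parameter count translates fairly directly into hardware needs. As a rough, back-of-the-envelope sketch: the memory needed just to store a model’s weights is about its parameter count times the bytes used per parameter, which is why quantization (for example, from 16-bit to 8-bit or 4-bit) shrinks the footprint. The 7B and 70B sizes below are example values, and the estimate deliberately ignores activations, the attention KV cache, and framework overhead, so real serving requirements will be higher.

```python
# Approximate storage cost of one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Memory needed only to hold the weights, in gigabytes (1 GB = 1e9 bytes).

    Excludes activations, KV cache, and runtime overhead.
    """
    return num_params * BYTES_PER_PARAM[precision] / 1e9


for params in (7e9, 70e9):
    for precision in ("fp16", "int8", "int4"):
        gb = weight_memory_gb(params, precision)
        print(f"{params / 1e9:.0f}B params @ {precision}: {gb:.1f} GB")
```

For a 70-billion-parameter model, the weights alone take about 140 GB at 16-bit precision and about 35 GB at 4-bit, which illustrates why optimization techniques matter as much as raw parameter count.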

3. Review The Sourcing Options:

Type and size aren’t the only considerations when comparing models. After shortlisting several AI models, businesses must decide how to build or source the Large Language Models (LLMs) that form the foundation of their applications. LLMs use statistical algorithms trained on extensive volumes of data to comprehend, summarize, generate, and predict text, and building and training a high-performing model often costs millions.

Businesses typically have three main options for LLM sourcing:

- Developing an LLM in-house from scratch, utilizing on-premises or cloud computing resources.

- Choosing pre-trained, open-source LLMs available in the market.

- Employing pre-trained, proprietary LLMs through various cloud services or platforms.

Many organizations lack the expertise or funding to build an LLM from scratch, or have no needs specific enough to justify it, which makes options 2 and 3 more practical and efficient ways to source and train LLMs.

Comparing open-source and proprietary models can be challenging, especially when considering the required infrastructure for building a scalable AI application. While open-source tools may seem initially cost-effective, evaluating all criteria for implementation, launch, security, and support reveals a less convincing picture.

To understand the distinctions between open-source and proprietary models, we recommend assessing multiple criteria beyond upfront costs, including time-to-solution, data provenance, data security options, the level of support, and the frequency of model updates.
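One way to assess several criteria at once is a weighted scoring matrix. In the sketch below, the criterion names, weights, and 1-to-5 scores are placeholders for illustration only; substitute your own evaluation, because the ranking depends entirely on these inputs.

```python
# Hypothetical weights (summing to 1.0) reflecting one organization's priorities.
WEIGHTS = {
    "upfront_cost": 0.15,
    "time_to_solution": 0.25,
    "data_provenance": 0.20,
    "data_security": 0.20,
    "support": 0.10,
    "update_frequency": 0.10,
}


def weighted_score(scores: dict[str, float], weights: dict[str, float] = WEIGHTS) -> float:
    """Combine per-criterion scores into a single weighted total."""
    missing = weights.keys() - scores.keys()
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return sum(weights[c] * scores[c] for c in weights)


# Illustrative 1-5 scores (higher is better); replace with your own assessment.
candidates = {
    "open-source option": {"upfront_cost": 5, "time_to_solution": 2, "data_provenance": 2,
                           "data_security": 3, "support": 2, "update_frequency": 2},
    "proprietary option": {"upfront_cost": 3, "time_to_solution": 4, "data_provenance": 4,
                           "data_security": 4, "support": 4, "update_frequency": 4},
}

for name, scores in sorted(candidates.items(), key=lambda kv: weighted_score(kv[1]), reverse=True):
    print(f"{name}: {weighted_score(scores):.2f}")
```

Making the weights explicit forces a conversation about which criteria matter most, rather than letting upfront cost dominate by default.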

For our readers’ convenience, here is a comparison of open-source and proprietary models:

| Criterion | Open-Source Model | Proprietary Model |
|---|---|---|
| Cost | Often free or low-cost, but deployment costs are very high compared to proprietary models. | Usually requires a subscription or usage fee. Most proprietary AI solution providers offer free trials. |
| Support and updates | Community-based support. Updates may be irregular. | Professional onboarding support and deployment engineers are available. Regular updates are provided. |
| Time-to-solution | Long, since integrating the model for a specific use case requires considerable expertise. | Short, because the integrated AI offerings are already tailored to specific enterprise use cases. |
| Data provenance | Lack of transparency into the data used to train the models makes them difficult for enterprises to use in applications. | Rigorous checks on source data ensure higher compliance satisfaction. |

For enterprise applications that demand the strongest security and transparency, pre-trained proprietary LLMs are often the most suitable choice. These models can be accessed through APIs, integrated into partner cloud networks, or deployed directly on-premises. Choosing them enables swift implementation and gives access to advanced capabilities, from providers such as NanoMatriX, that meet a wide range of enterprise needs.

4. Scaling The AI Model:

Once your proof of concept is successful, start scaling your chosen AI model across your business. Moving from proofs of concept (POCs) to full production requires a thorough understanding of how scaling Large Language Model (LLM) applications affects costs, performance, and return on investment (ROI). Beyond model type, size, and sourcing options, it is crucial to evaluate supporting infrastructure and model-serving capabilities. This evaluation keeps your scaling strategy aligned with your objectives and helps you achieve your initial goals.

Begin by examining data residency requirements to determine whether a multi-tenant, hosted, API-based solution or a more secure, isolated solution is preferable based on your needs. Estimate the volume and traffic you intend to handle, considering factors like rate limits for hosted APIs that may impact user experience. Identify the required skills, from prompt engineering to model training, and ensure the availability of these skills in-house through upskilling or recruitment.
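Estimating traffic against a hosted API’s rate limits can start as simple arithmetic. The sketch assumes a provider that enforces both a requests-per-minute (RPM) and a tokens-per-minute (TPM) limit; the peak traffic, token counts, and limits below are hypothetical, so check your provider’s actual quotas.

```python
import math


def rate_limit_check(peak_rpm: float, tokens_per_request: float,
                     rpm_limit: float, tpm_limit: float) -> dict:
    """Compare expected peak traffic with hypothetical RPM and TPM quotas."""
    peak_tpm = peak_rpm * tokens_per_request
    rpm_utilization = peak_rpm / rpm_limit
    tpm_utilization = peak_tpm / tpm_limit
    return {
        "peak_tpm": peak_tpm,
        "rpm_utilization": rpm_utilization,
        "tpm_utilization": tpm_utilization,
        # Multiples of the current quota needed to absorb the peak (1 = fits today).
        "quota_multiple_needed": math.ceil(max(rpm_utilization, tpm_utilization)),
    }


# Hypothetical workload: 250 requests/min at peak, about 1,500 tokens each
# (prompt plus completion), against an assumed 500 RPM / 200,000 TPM quota.
result = rate_limit_check(peak_rpm=250, tokens_per_request=1500,
                          rpm_limit=500, tpm_limit=200_000)
print(result)
```

With these assumed figures, the token limit rather than the request limit is the bottleneck: peak usage is 1.875 times the TPM quota, so the workload would need roughly double the quota, request batching, or a more isolated deployment.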

Consider the costs and benefits of different solutions, such as adapting pre-trained models or using retrieval-augmented generation (RAG) for dynamic data. Assess your operational capabilities and decide whether to run AI deployments in-house or use externally managed services, such as Amazon Bedrock or NanoMatriX.
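The cost side of the in-house versus managed decision can be sketched as a break-even calculation between a pay-per-token hosted API and a self-hosted deployment billed per GPU-hour. Every price and volume below is a hypothetical assumption, and the self-hosted figure ignores capacity limits (it assumes the chosen GPUs can serve the full load), so treat the result as a starting point for your own numbers.

```python
def api_cost_per_month(requests: int, tokens_per_request: int,
                       price_per_1k_tokens: float) -> float:
    """Hosted API cost: pay only for the tokens processed."""
    return requests * tokens_per_request / 1000 * price_per_1k_tokens


def self_hosted_cost_per_month(gpus: int, gpu_hourly_rate: float,
                               ops_overhead: float, hours: float = 730) -> float:
    """Self-hosted cost: GPUs run all month (about 730 hours) regardless of traffic, plus ops overhead."""
    return gpus * gpu_hourly_rate * hours + ops_overhead


def break_even_requests(tokens_per_request: int, price_per_1k_tokens: float,
                        self_hosted_monthly: float) -> float:
    """Monthly request volume above which self-hosting becomes cheaper than the API."""
    cost_per_request = tokens_per_request / 1000 * price_per_1k_tokens
    return self_hosted_monthly / cost_per_request


# Hypothetical inputs: $0.002 per 1k tokens, 1,500 tokens per request,
# 2 GPUs at $2.50/hour, and $3,000/month of operational overhead.
fixed = self_hosted_cost_per_month(gpus=2, gpu_hourly_rate=2.50, ops_overhead=3000)
threshold = break_even_requests(1500, 0.002, fixed)
print(f"Self-hosted: ${fixed:,.0f}/month; break-even at ~{threshold:,.0f} requests/month")
print(f"API at 1M requests/month: ${api_cost_per_month(1_000_000, 1500, 0.002):,.0f}")
```

Under these assumptions, the API costs about $3,000 at 1 million requests per month versus $6,650 for self-hosting, and self-hosting only pays off above roughly 2.2 million requests per month.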

In short, don’t rush when selecting an AI model. Invest time in understanding the cost, performance, and risks so that you can confidently derive significant value from your LLM application.

About NanoMatriX Technologies Limited:

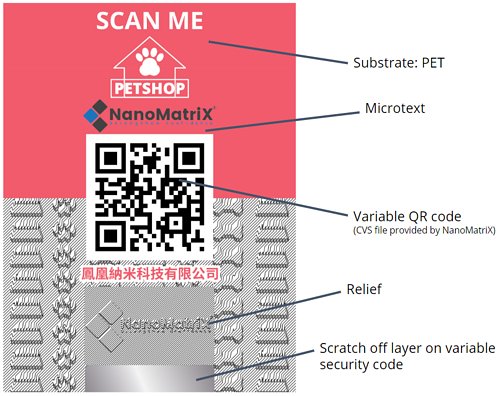

NanoMatriX Technologies Limited helps brands and organizations secure their data through innovative, cutting-edge, AI-enabled software solutions that are cyber-secure and data-protected. NanoMatriX Technologies Limited is a pioneer in introducing advanced authentication, document protection, traceability, and software solutions to combat cybercrime, counterfeiting, and privacy breaches. Our secure, data-protected, standardized, and innovative technologies help safeguard brands, products, solutions, and documents from unauthorized reproduction and duplication.

NanoMatriX Technologies Limited is committed to the highest standards of cyber security, data privacy protection, and quality management. Our systems are certified and compliant with leading international standards, including:

- ISO 27001: Ensuring robust Information Security Management Systems (ISMS).

- ISO 27701: Upholding Privacy Information Management Systems (PIMS) for effective data privacy.

- ISO 27017: Implementing ISMS for cloud-hosted systems, ensuring cybersecurity in cloud environments.

- ISO 27018: Adhering to PIMS for cloud-hosted systems, emphasizing privacy in cloud-hosted services.

- ISO 9001: Demonstrating our commitment to Quality Management Systems, and delivering high-quality solutions.

Read the fourth blog post for NanoMatriX’s AI Learning and Awareness series here.
